To enable infinite interactive experiences, we need 3D scenes with vivid details. However, creating a high-quality 3D scene is resource-intensive and time-consuming; for some games, this process can take several years. To ease this burden on game developers, Procedural Content Generation (PCG) is widely adopted in game development. By repeatedly applying human-defined rules and learned patterns with some randomness, PCG can automatically create 3D scenes at large scale. It also keeps those scenes diverse enough to make gameplay less predictable, which increases player engagement. Many classic games have adopted PCG to speed up development and enrich gameplay, such as Rogue (1980), Spore (2008), Minecraft (2011) and No Man's Sky (2016).
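To make "rules applied repeatedly with some randomness" concrete, here is a toy sketch of PCG in general, not of CityGenAgent. The rule names, value ranges and the 30% shop probability are invented for illustration. A fixed seed reproduces the same street exactly, while different seeds give different streets. This is how PCG provides both control and diversity.

```python
import random

# Hypothetical rules: each one decides a single attribute of a building lot,
# drawing from a shared random generator so results are reproducible per seed.
def rule_floors(rng: random.Random) -> int:
    return rng.randint(2, 12)

def rule_style(rng: random.Random) -> str:
    return rng.choice(["brick", "glass", "concrete"])

def rule_has_shop(rng: random.Random) -> bool:
    return rng.random() < 0.3

RULES = {"floors": rule_floors, "style": rule_style, "has_shop": rule_has_shop}

def generate_street(seed: int, num_lots: int = 5) -> list[dict]:
    """Apply every rule to every lot; the seed controls all randomness."""
    rng = random.Random(seed)
    return [{name: rule(rng) for name, rule in RULES.items()} for _ in range(num_lots)]

print(generate_street(seed=1) == generate_street(seed=1))  # True: same seed, same street
print(generate_street(seed=2))  # a different seed yields a different street
```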

Inspired by the observation that PCG is a form of structured language, and building on recent successes of LLMs and LLM-based agents, we are happy to announce SceneGenAgent (CityGenAgent), our first milestone towards procedural 3D scene generation. CityGenAgent is the first system to enable dialog-based interactive generation and editing of 3D cities. With it, 3D cities with diverse layouts, building configurations and styles can be easily created or modified through natural language.

Moreover, these 3D cities have production-level quality in geometry and texture details. They can therefore serve as standard assets in graphics engines like Unreal for building different kinds of gameplay.

Method

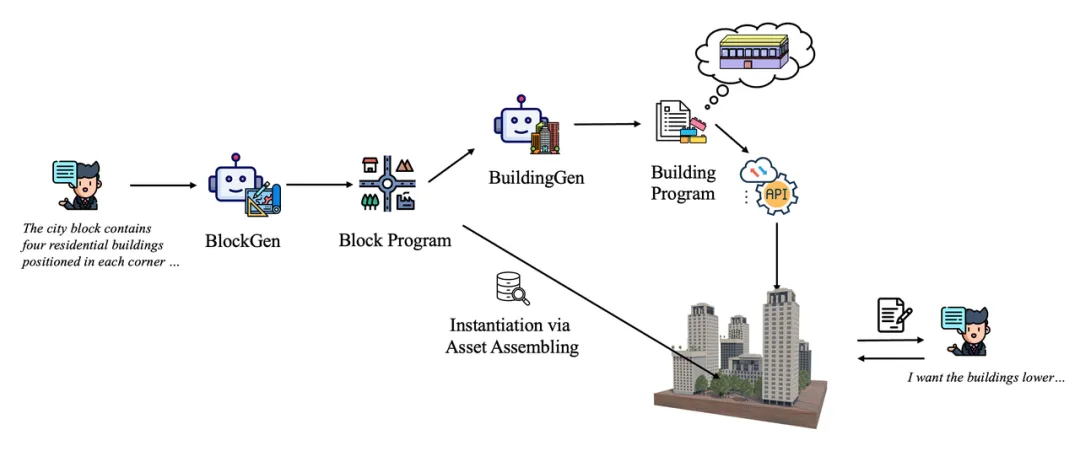

Cities are large in scale and complex in structure, containing roads, trees, buildings and other decorations. This makes end-to-end modeling of 3D cities neither effective nor efficient. Fortunately, cities are rich in recurring patterns: across a city's blocks, across the buildings within a block, and across the floors within a building. CityGenAgent therefore adopts a hierarchical procedural generation framework, in which programs for city blocks and for buildings are generated separately by two different LLM subagents.

First, a BlockGen subagent is trained to understand user instructions and output block programs that are both aesthetically pleasing and logically sound. This stage determines the shape and location of each building, as well as the spatial configuration of the road network and decorations. Next, a BuildingGen subagent encodes the spatial, geometric and functional constraints conveyed by the block programs and user instructions into scalable, rule-based building programs. Finally, the block and building programs are used to assemble atomic assets into the desired 3D city instances. Because these programs serve as intermediate proxies, users can adjust blocks or buildings at will in real time.
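The data flow of this hierarchy can be sketched as follows. This is a minimal sketch under assumptions. `block_gen` and `building_gen` are hypothetical stand-ins that return fixed values where the real system calls the BlockGen and BuildingGen LLMs. The schema (`BuildingSlot`, `BlockProgram`) and the rule names `set_footprint` and `add_floors` are invented. Only `add_door` and its parameters come from this post. The final executor stage, which turns programs into assets, is omitted.

```python
from dataclasses import dataclass, field

@dataclass
class BuildingSlot:
    """Footprint and location fixed by a block program (hypothetical schema)."""
    building_id: str
    x: float
    y: float
    width: float
    depth: float
    use: str  # e.g. "residential" or "commercial"

@dataclass
class BlockProgram:
    buildings: list[BuildingSlot]
    roads: list[tuple[float, float, float, float]] = field(default_factory=list)
    decorations: list[str] = field(default_factory=list)

def block_gen(instruction: str) -> BlockProgram:
    """Stand-in for the BlockGen subagent; returns a fixed layout for illustration."""
    return BlockProgram(
        buildings=[BuildingSlot("b0", 0.0, 0.0, 20.0, 15.0, "residential"),
                   BuildingSlot("b1", 30.0, 0.0, 25.0, 20.0, "commercial")],
        roads=[(-5.0, -5.0, 60.0, -5.0)],
        decorations=["tree", "bench"],
    )

def building_gen(slot: BuildingSlot, instruction: str) -> list[tuple[str, dict]]:
    """Stand-in for BuildingGen: turns one slot's constraints into rule calls."""
    floors = 6 if slot.use == "residential" else 3
    return [("set_footprint", {"width": slot.width, "depth": slot.depth}),
            ("add_floors", {"count": floors}),
            ("add_door", {"height": 2.2, "width": 1.0, "door_style": "wood"})]

def generate_city(instruction: str) -> dict[str, list[tuple[str, dict]]]:
    block = block_gen(instruction)                       # stage 1: block layout
    return {slot.building_id: building_gen(slot, instruction)  # stage 2: per building
            for slot in block.buildings}

programs = generate_city("a small mixed-use block")
print(programs["b1"][1])  # ('add_floors', {'count': 3})
```

Keeping the two stages separate means a user edit to one building only requires regenerating that building's program.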

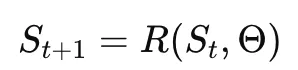

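In CityGenAgent, each state update can be expressed as

$$S_{t+1} = R(S_t;\ \Theta)$$

where $S_t$ is the state after $t$ steps, $R$ denotes one of the rules predefined by human experts or learned from data, and $\Theta$ represents its adjustable parameters. By recursively updating the state based on the input conditions, programs made of a compatible set of rules and parameters can be generated automatically:

$$P = \big[(R_1, \Theta_1), \ldots, (R_T, \Theta_T)\big], \qquad S_T = R_T\big(\cdots R_2(R_1(S_0;\ \Theta_1);\ \Theta_2) \cdots;\ \Theta_T\big)$$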

For example, in the BuildingGen subagent, a rule R could be `add_door`, whose parameters Θ include `height`, `width` and `door_style`.
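The recursive state update can be sketched as a fold over a program. Each rule R maps the current state and its parameters Θ to the next state. This is a minimal sketch, assuming a dictionary-based state and a hypothetical `add_floor` rule. Only `add_door` and its parameter names come from the example in the text.

```python
from functools import reduce
from typing import Any, Callable

State = dict[str, Any]                 # hypothetical state: the building so far
Rule = Callable[[State, dict], State]  # R(S_t; Θ) -> S_{t+1}

def add_floor(state: State, params: dict) -> State:
    # Append one floor of the given height; returns a new state, no mutation.
    return {**state, "floors": state["floors"] + [params["height"]]}

def add_door(state: State, params: dict) -> State:
    # Parameter names follow the add_door example in the text.
    door = {k: params[k] for k in ("height", "width", "door_style")}
    return {**state, "doors": state["doors"] + [door]}

RULES: dict[str, Rule] = {"add_floor": add_floor, "add_door": add_door}

def run_program(program: list[tuple[str, dict]], s0: State) -> State:
    """Apply S_{t+1} = R_t(S_t; Θ_t) for every (rule, params) step in order."""
    return reduce(lambda s, step: RULES[step[0]](s, step[1]), program, s0)

program = [("add_floor", {"height": 3.5}),
           ("add_floor", {"height": 3.0}),
           ("add_door", {"height": 2.2, "width": 1.0, "door_style": "wood"})]
print(run_program(program, {"floors": [], "doors": []}))
# {'floors': [3.5, 3.0], 'doors': [{'height': 2.2, 'width': 1.0, 'door_style': 'wood'}]}
```

Making each rule a pure function keeps intermediate states intact. That is convenient if a user later edits one step and the program is replayed.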

Both the BlockGen and BuildingGen subagents are fine-tuned from Qwen-8B. For each subagent, we collected a small-scale dataset of (user instruction, program) pairs and used it for supervised fine-tuning (SFT) of the vanilla Qwen-8B. This SFT stage warms up the BlockGen subagent. It learns to capture the spatial relationships among core block elements such as buildings and plants, and to align these relationships semantically with user instructions. The BuildingGen subagent is warmed up in the same way.
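As an illustration of the SFT data, each (user instruction, program) pair can be stored as a chat-style example. This is a sketch, assuming the common "messages" JSONL layout accepted by many fine-tuning tools. The post does not describe its actual data format, and `SYSTEM_PROMPT` is hypothetical.

```python
import json
from dataclasses import dataclass

@dataclass
class ProgramPair:
    instruction: str  # natural-language request from the user
    program: str      # target block or building program, serialized as text

SYSTEM_PROMPT = "You write block programs for a procedural city generator."  # hypothetical

def to_chat_example(pair: ProgramPair) -> dict:
    # The assistant turn holds the target program; trainers commonly compute
    # the SFT loss only on this turn and treat the others as conditioning.
    return {"messages": [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": pair.instruction},
        {"role": "assistant", "content": pair.program},
    ]}

def write_jsonl(pairs: list[ProgramPair], path: str) -> None:
    # One JSON object per line, UTF-8 encoded.
    with open(path, "w", encoding="utf-8") as f:
        for pair in pairs:
            f.write(json.dumps(to_chat_example(pair), ensure_ascii=False) + "\n")
```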

One example user instruction for block program generation:

> A block consists of 70% residential buildings, 20% commercial buildings, and 10% green spaces evenly distributed throughout the area. The residential buildings are primarily apartment complexes, while the commercial spaces include small retail and office establishments.
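An instruction like this one imposes hard numeric constraints that a block program has to satisfy. The sketch below shows one way percentages could be turned into whole lots and spread along a street. It uses largest-remainder rounding and evenly spaced positions. Both techniques are chosen here for illustration, and the function names are hypothetical. The post does not say how BlockGen handles such constraints.

```python
def allocate_lots(num_lots: int, percents: dict[str, int]) -> dict[str, int]:
    """Largest-remainder rounding: counts follow the percentages and sum to num_lots."""
    assert sum(percents.values()) == 100
    counts = {k: num_lots * p // 100 for k, p in percents.items()}
    remainders = {k: num_lots * p % 100 for k, p in percents.items()}
    leftover = num_lots - sum(counts.values())
    # Hand the leftover lots to the categories that lost the most to rounding down.
    for k in sorted(remainders, key=remainders.get, reverse=True)[:leftover]:
        counts[k] += 1
    return counts

def interleave(counts: dict[str, int]) -> list[str]:
    """Spread each category evenly along the lot sequence."""
    # Item i of a category with n items goes to fractional position (i + 0.5) / n;
    # ties are broken by category name.
    slots = [((i + 0.5) / n, k) for k, n in counts.items() for i in range(n)]
    return [k for _, k in sorted(slots)]

counts = allocate_lots(20, {"residential": 70, "commercial": 20, "green": 10})
print(counts)  # {'residential': 14, 'commercial': 4, 'green': 2}
print(interleave(counts))
```

Integer percentages keep the arithmetic exact, so the counts always add up to the number of lots.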

After the warm-up, the subagents are further fine-tuned with PPO to improve the spatial plausibility and visual appeal of the programs they generate. These qualities cannot be measured with a closed-form reward. In practice, we therefore train reward models that approximate feedback from humans and from stronger general-purpose LLMs such as GPT.
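For reference, PPO optimizes the clipped surrogate objective sketched below. This is the standard PPO-Clip formula written in plain Python over a batch of per-action log-probabilities and advantages. The post does not say how the two kinds of reward-model feedback are combined. The weighted blend in `blended_reward` is a hypothetical example. In real LLM training, rewards are typically turned into advantages with a value model (for example via GAE), which is omitted here.

```python
import math

def blended_reward(human_rm: float, llm_rm: float, w_human: float = 0.5) -> float:
    """Hypothetical mix of two reward-model scores into one scalar reward."""
    return w_human * human_rm + (1.0 - w_human) * llm_rm

def ppo_clip_objective(logp_new: list[float], logp_old: list[float],
                       advantages: list[float], eps: float = 0.2) -> float:
    """Mean PPO-Clip surrogate (to be maximized) over a batch of actions."""
    total = 0.0
    for new, old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(new - old)                      # pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)  # clip(ratio, 1-eps, 1+eps)
        # Taking the minimum removes any incentive to push the ratio outside the clip range.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)
```

With an unchanged policy (ratio 1), the objective reduces to the mean advantage. Once the ratio leaves the range [1 - ε, 1 + ε] in the direction the advantage favors, the gain is capped, which keeps each update close to the previous policy.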

To instantiate the programs generated by the BlockGen and BuildingGen subagents, we built a custom program executor compatible with our rules and parameters. It wraps the APIs of graphics software such as Blender and Unreal into rule-oriented executor functions. With this executor, CityGenAgent seamlessly maps programs and their parameters to 3D buildings and blocks. A particular advantage of CityGenAgent is its extensibility. Simply by adding new rules, executor functions and atomic assets, or by upgrading existing ones, CityGenAgent can be substantially enriched without any retraining.
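The executor design can be sketched as a registry that maps rule names to executor functions, each of which calls a thin engine wrapper. This is a minimal sketch under assumptions. `Backend.spawn`, the asset names and `add_balcony` are hypothetical and are not real Blender or Unreal API calls. `RecordingBackend` only logs calls, so the example runs without an engine.

```python
from typing import Callable, Protocol

class Backend(Protocol):
    """Hypothetical thin wrapper over an engine API (e.g. Blender or Unreal)."""
    def spawn(self, asset: str, **transform: float) -> None: ...

class RecordingBackend:
    """Test double that logs calls instead of touching a real engine."""
    def __init__(self) -> None:
        self.calls: list[tuple[str, dict]] = []

    def spawn(self, asset: str, **transform: float) -> None:
        self.calls.append((asset, transform))

EXECUTORS: dict[str, Callable[..., None]] = {}

def executor(rule: str):
    """Decorator that registers an executor function under a rule name."""
    def register(fn: Callable[..., None]) -> Callable[..., None]:
        EXECUTORS[rule] = fn
        return fn
    return register

@executor("add_door")
def exec_add_door(backend: Backend, height: float, width: float, door_style: str) -> None:
    # Pick an atomic asset by style and scale it to the requested size.
    backend.spawn(f"door_{door_style}", sx=width, sz=height)

def execute(program: list[tuple[str, dict]], backend: Backend) -> None:
    # Dispatch each (rule, params) step to its registered executor function.
    for rule, params in program:
        EXECUTORS[rule](backend, **params)

# Extending the executor: a new rule only needs a new registered function (and assets).
@executor("add_balcony")
def exec_add_balcony(backend: Backend, depth: float) -> None:
    backend.spawn("balcony_basic", sy=depth)

backend = RecordingBackend()
execute([("add_door", {"height": 2.2, "width": 1.0, "door_style": "wood"}),
         ("add_balcony", {"depth": 1.5})], backend)
print(backend.calls)  # [('door_wood', {'sx': 1.0, 'sz': 2.2}), ('balcony_basic', {'sy': 1.5})]
```

Keeping engine calls behind the backend lets the same programs target different engines. Adding a rule on the executor side leaves existing code untouched.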

Looking Ahead

Building on CityGenAgent, we are further enriching its diversity in program structure and visual style. We are also expanding CityGenAgent into SceneGenAgent, which will generate not just cities but also wild landscapes and indoor environments. Most importantly, we will integrate CityGenAgent into different gameplays to enable exciting interactive experiences! If any of these plans excites you, we welcome you to join us on this journey!